Before we get started, the developers of Pocket Anatomy would like to acknowledge the contributions from the following sources, without which this project would not have been possible.

Visible Human Database

US National Library of Medicine.

Permission granted in March 2012.

BodyParts 3D Database

Database Center for Life Science, University of Tokyo.

Permission granted in September 2016.

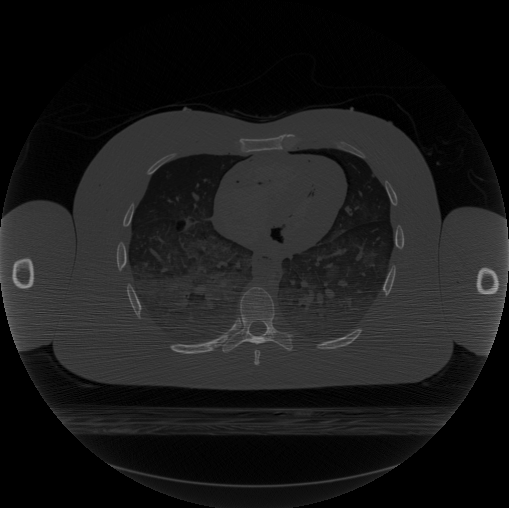

Figure 1: Example of CT image from Visible Human Data Set.

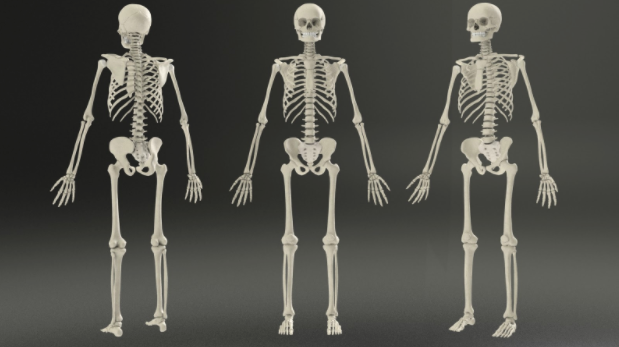

The skeletal system was built with the aid of the Visible Human database. The Visible Human Project is a data set of images of the human body intended to support anatomy visualisation applications. The data set consists of cross-sectional cryosection images, MRI scans and CT scans of the human body. The Visible Human Project is run by the U.S. National Library of Medicine (NLM), and the data can be obtained free of charge from the NLM website, although users must sign a licence agreement and state their intended use of the data. The skeleton was created from the CT data of the Visible Human Project.

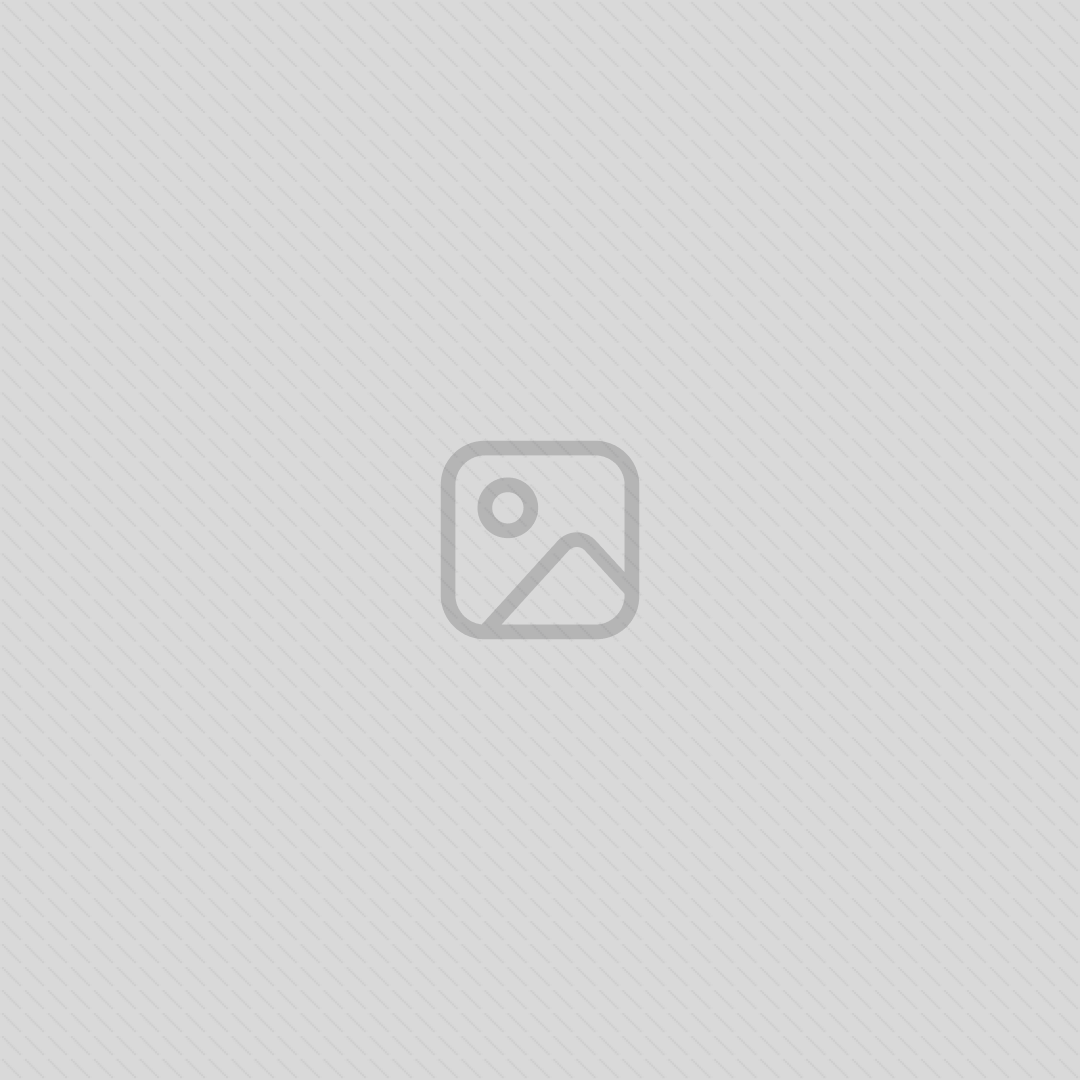

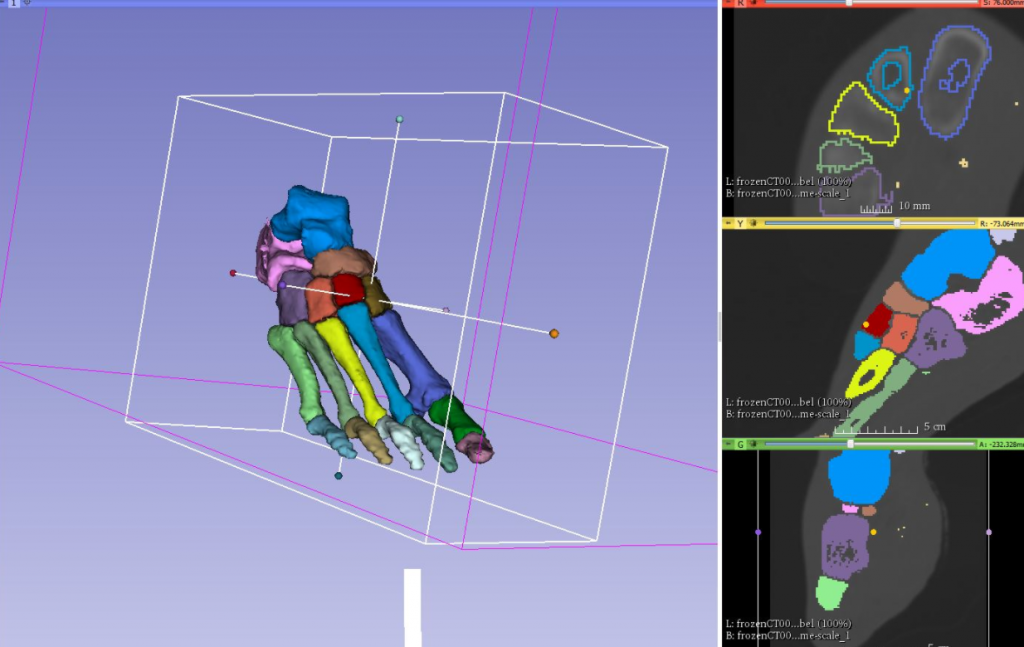

Figure 1.1: Labelling and Segmenting in the 3D Slicer Software Application.

The image data is loaded into 3D Slicer, a free and open-source software package for image analysis and scientific visualisation, and cropped to contain the area to be modelled. A label map is then created over the area of interest using the ‘Editor’ tools in 3D Slicer.
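As a rough illustration of the crop-then-label step, the sketch below crops a CT volume and thresholds it into a binary label map. It is not how the 3D Slicer Editor is implemented. It assumes the volume is a nested Python list indexed `volume[z][y][x]` holding Hounsfield-unit values, and `BONE_THRESHOLD_HU` is a hypothetical cut-off chosen only for illustration.

```python
# Minimal sketch of "crop, then label" on a CT volume.
# Assumption: volume[z][y][x] holds Hounsfield-unit (HU) values.
# BONE_THRESHOLD_HU is a hypothetical cut-off, not a 3D Slicer default.

BONE_THRESHOLD_HU = 300


def crop(volume, z_range, y_range, x_range):
    """Return the sub-volume inside half-open (start, stop) index ranges."""
    (z0, z1), (y0, y1), (x0, x1) = z_range, y_range, x_range
    return [[row[x0:x1] for row in plane[y0:y1]] for plane in volume[z0:z1]]


def threshold_label_map(volume, lower=BONE_THRESHOLD_HU, label=1):
    """Label every voxel at or above `lower` with `label`; everything else is 0."""
    return [
        [[label if value >= lower else 0 for value in row] for row in plane]
        for plane in volume
    ]


if __name__ == "__main__":
    # Tiny 2 x 2 x 3 volume: low values stand in for soft tissue, high for bone.
    ct = [
        [[40, 700, 650], [30, 20, 900]],
        [[10, 800, 35], [45, 760, 50]],
    ]
    region = crop(ct, (0, 2), (0, 2), (1, 3))
    print(threshold_label_map(region))  # [[[1, 1], [0, 1]], [[1, 0], [1, 0]]]
```

In practice the label map is usually refined by hand afterwards, since a single global threshold rarely separates bone cleanly from every neighbouring structure.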

A model is then built from the labelled area using the ‘Model Maker’ module. This model can be saved as an .stl file, which can be opened in external programs.
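The .stl format is simple enough to sketch by hand, which helps explain why it opens in so many external programs. The example below assumes the surface is already available as a list of triangles (each a tuple of three `(x, y, z)` points) and writes the ASCII variant of STL; STL also has a binary variant, and 3D Slicer may write either. The function names are hypothetical.

```python
import math


def facet_normal(a, b, c):
    """Unit normal of triangle (a, b, c) from the cross product (b - a) x (c - a)."""
    ux, uy, uz = b[0] - a[0], b[1] - a[1], b[2] - a[2]
    vx, vy, vz = c[0] - a[0], c[1] - a[1], c[2] - a[2]
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        return (0.0, 0.0, 0.0)  # degenerate (zero-area) triangle
    return (nx / length, ny / length, nz / length)


def to_ascii_stl(triangles, name="model"):
    """Serialise triangles (each three (x, y, z) points) as ASCII STL text."""
    lines = [f"solid {name}"]
    for a, b, c in triangles:
        n = facet_normal(a, b, c)
        lines.append(f"  facet normal {n[0]:e} {n[1]:e} {n[2]:e}")
        lines.append("    outer loop")
        for x, y, z in (a, b, c):
            lines.append(f"      vertex {x:e} {y:e} {z:e}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # One right-angled triangle in the z = 0 plane; its normal points along +z.
    tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
    print(to_ascii_stl([tri]), end="")
```

Because every facet repeats its three vertices, STL stores no shared connectivity, which is one reason the exported mesh needs cleaning before it is useful in a real-time engine.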

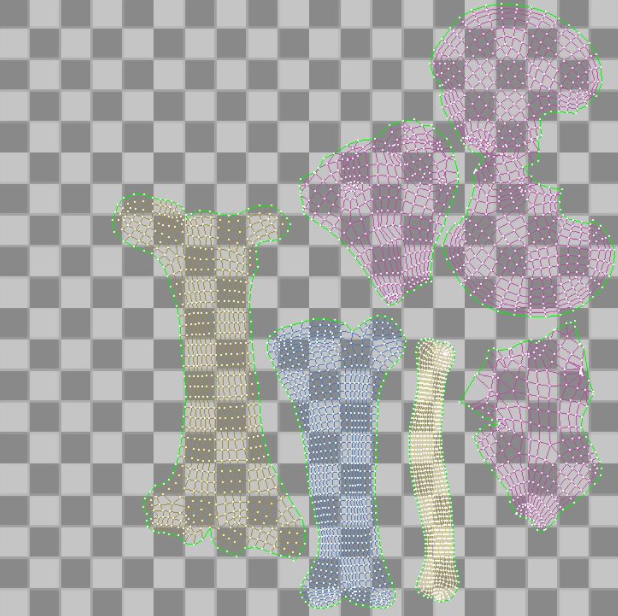

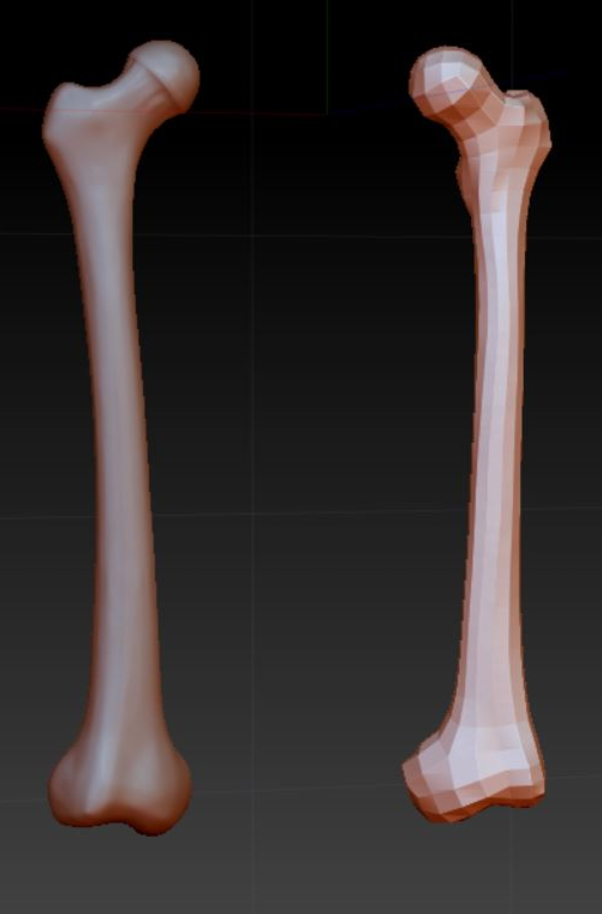

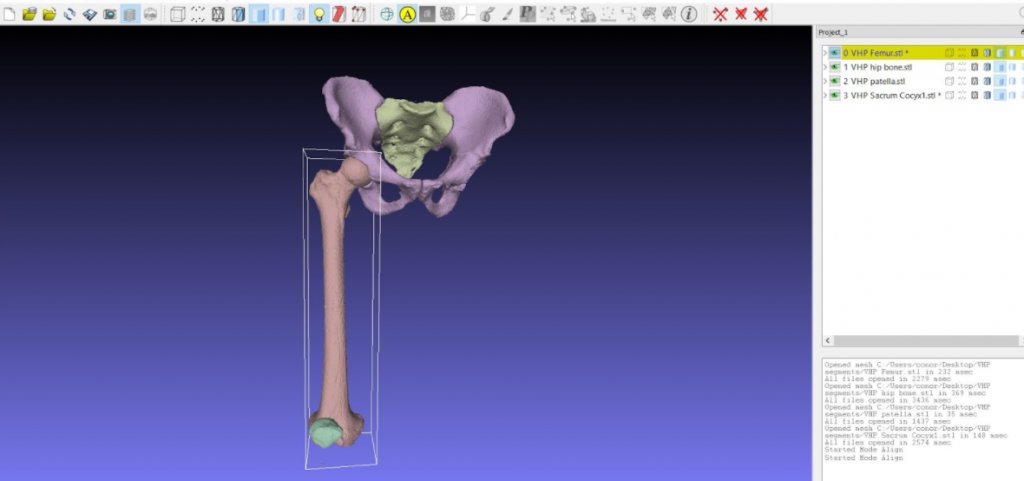

Figure 1.2: Tidying the meshes in the MeshLab Software Application.

However, the resulting model is far from ready for use in a real-time engine. The mesh has too high a polygon count, which causes problems in such engines; it also contains errors and consists of triangular polygons, which are likewise not ideal for optimum performance in a real-time engine. The surface model from 3D Slicer therefore needs to be tidied up and re-meshed for our intended use.

The segmented surface model is then imported into ‘MeshLab’, another open-source application, available at meshlab.sourceforge.net/, where initial adjustments are made to the mesh, such as removing isolated vertices and lowering the polygon count.
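The two clean-up operations named above can be sketched in a few lines. The example assumes a triangle mesh stored as a list of `(x, y, z)` vertex tuples plus a list of faces given as tuples of zero-based vertex indices. For lowering the polygon count it uses vertex clustering, a deliberately simple technique swapped in for illustration; MeshLab offers several simplification filters (for example quadric edge collapse decimation), and this is not its implementation. `cell_size` is a hypothetical parameter.

```python
import math


def remove_unreferenced_vertices(vertices, faces):
    """Drop vertices that no face uses and re-index the faces accordingly."""
    used = sorted({i for face in faces for i in face})
    remap = {old: new for new, old in enumerate(used)}
    return [vertices[i] for i in used], [tuple(remap[i] for i in f) for f in faces]


def cluster_decimate(vertices, faces, cell_size):
    """Vertex-clustering simplification of a triangle mesh on a uniform grid.

    Vertices falling in the same grid cell are merged into their average;
    faces that collapse to an edge or a point, or duplicate a face already
    kept, are dropped, and vertices left unused are removed.
    """
    cell_of, members = [], {}
    for i, (x, y, z) in enumerate(vertices):
        key = (math.floor(x / cell_size), math.floor(y / cell_size),
               math.floor(z / cell_size))
        cell_of.append(key)
        members.setdefault(key, []).append(i)

    # One merged vertex per occupied cell, in first-seen order.
    cell_index = {key: n for n, key in enumerate(members)}
    merged = [
        tuple(sum(vertices[i][k] for i in idxs) / len(idxs) for k in range(3))
        for idxs in members.values()
    ]

    new_faces, seen = [], set()
    for face in faces:
        mapped = tuple(cell_index[cell_of[i]] for i in face)
        if len(set(mapped)) < 3:
            continue  # collapsed to an edge or a point
        signature = tuple(sorted(mapped))
        if signature in seen:
            continue  # same corners as a face already kept
        seen.add(signature)
        new_faces.append(mapped)
    return remove_unreferenced_vertices(merged, new_faces)
```

A larger `cell_size` merges more vertices and removes more faces, trading surface detail for a lower polygon count; that lost detail is what the later sculpting and normal-mapping steps restore.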

After initial tidying in MeshLab, the model is exported as an .obj file, which can then be imported into ZBrush, where it undergoes further repair and retopology using methods such as ZRemesher and DynaMesh.
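An .obj file is plain text, which makes it a convenient exchange format between applications. The sketch below reads and writes only a minimal subset of Wavefront OBJ (`v` vertex records and `f` face records), assuming a mesh stored as a list of `(x, y, z)` vertex tuples and a list of faces given as tuples of zero-based vertex indices. Texture coordinates, normals, groups and negative (relative) indices are ignored. Faces may have any number of corners, so quad meshes such as those produced by retopology survive the round trip. The function names are hypothetical.

```python
def write_obj(vertices, faces):
    """Serialise vertices and zero-based faces as minimal Wavefront OBJ text."""
    lines = [f"v {x} {y} {z}" for x, y, z in vertices]
    # OBJ face indices are 1-based, so shift every index by one.
    lines += ["f " + " ".join(str(i + 1) for i in face) for face in faces]
    return "\n".join(lines) + "\n"


def read_obj(text):
    """Parse `v` and `f` records; every other record type is ignored."""
    vertices, faces = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts or parts[0].startswith("#"):
            continue  # blank line or comment
        if parts[0] == "v":
            vertices.append(tuple(float(p) for p in parts[1:4]))
        elif parts[0] == "f":
            # A corner may be "i", "i/t", "i//n" or "i/t/n"; keep only i.
            # Negative (relative) indices are not handled in this sketch.
            faces.append(tuple(int(p.split("/")[0]) - 1 for p in parts[1:]))
    return vertices, faces
```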

The repaired low-polygon mesh is then ‘unwrapped’ in 3ds Max, a process that allows a 2D image to be projected onto a 3D model for texturing and for the creation of normal maps. High-level detail is then sculpted onto the model in ZBrush, and a normal map is created in xNormal to show that detail on the low-poly model.
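A tangent-space normal map stores one unit surface normal per texel. The common encoding maps each component from [-1, 1] to an 8-bit channel, which is why an undisturbed (flat) normal `(0, 0, 1)` appears as the light blue `(128, 128, 255)`. The sketch below shows that mapping and its inverse; it does not bake anything from a high-poly mesh as xNormal does, and channel conventions differ between tools (for instance, whether green encodes +Y or -Y), so treat the exact layout as an assumption. The function names are hypothetical.

```python
import math


def encode_normal(n):
    """Map a tangent-space normal (components in [-1, 1]) to 8-bit RGB."""
    length = math.sqrt(sum(c * c for c in n))
    # (c + 1) / 2 moves each component into [0, 1]; + 0.5 rounds to nearest.
    return tuple(int((c / length + 1.0) / 2.0 * 255.0 + 0.5) for c in n)


def decode_normal(rgb):
    """Inverse mapping from 8-bit RGB back to a unit-length normal."""
    v = [c / 255.0 * 2.0 - 1.0 for c in rgb]
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


if __name__ == "__main__":
    print(encode_normal((0.0, 0.0, 1.0)))  # (128, 128, 255): a flat surface
```

The round trip loses a little precision to 8-bit quantisation, which is usually invisible at normal viewing distances but is one reason some pipelines use higher bit depths for normal maps.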

Figure 1.3 (left): Preparing the UV Tiles for Texturing

Next, texture maps are painted in Photoshop. The resulting maps are applied to the unwrapped low-poly model, allowing it to keep its surface detail while still achieving optimum performance in a real-time engine.
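To show how a painted map reaches the model through its unwrapped UVs, the sketch below samples a texture at a `(u, v)` coordinate with bilinear interpolation, roughly what a GPU does when rendering a textured low-poly model. It assumes the texture is a list of rows of RGB tuples and that `v = 0` is the bottom row (an OpenGL-style convention; some tools flip it), and it clamps at the edges rather than wrapping. The function names are hypothetical.

```python
import math


def _lerp(a, b, t):
    """Linear interpolation between colour tuples a and b."""
    return tuple(ca + (cb - ca) * t for ca, cb in zip(a, b))


def sample_bilinear(texture, u, v):
    """Bilinearly sample `texture` (rows of RGB tuples) at UV coordinate (u, v).

    Assumes v = 0 is the bottom row and clamps lookups to the texture edge.
    """
    height, width = len(texture), len(texture[0])
    # Texel centres sit at half-integer positions in pixel space.
    x = u * width - 0.5
    y = (1.0 - v) * height - 0.5  # flip v so that row 0 is the top of the image
    x0 = min(max(math.floor(x), 0), width - 1)
    y0 = min(max(math.floor(y), 0), height - 1)
    x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
    fx = min(max(x - x0, 0.0), 1.0)
    fy = min(max(y - y0, 0.0), 1.0)
    top = _lerp(texture[y0][x0], texture[y0][x1], fx)
    bottom = _lerp(texture[y1][x0], texture[y1][x1], fx)
    return _lerp(top, bottom, fy)
```

Sampling exactly at a texel centre returns that texel's colour, while points between centres blend their four neighbours, so a texture painted at a modest resolution still looks smooth on the model.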

Detail lost during retopology and mesh cleaning is sculpted back in ZBrush.

Figure 1.4 (right): Sculpting detail in the ZBrush Software Application.